“Before You Measure: Why Calibrating a Dial Indicator Really Matters”

Let’s be honest — in the world of machining, it’s often the smallest tools that carry the biggest responsibility. And when it comes to getting precision down to the thousandths, few tools are as trusted (or as quietly dangerous when misaligned) as the dial indicator.

Now here’s a question for you: When was the last time you calibrated yours?

If your answer is, “Uhh… I think it’s fine,” — hey, you’re not alone. A lot of machinists and toolmakers use their dial indicators daily without a second thought. But here’s the thing: if your dial indicator isn’t properly calibrated, it’s like using a ruler that’s almost an inch long. That little discrepancy? It adds up — fast.

Whether you’re squaring a vise, checking spindle runout, or leveling a machine bed, your indicator is your eyes. And if those “eyes” are off, well… you’re basically working blind. That’s a scary thought, right?

I’ve seen it firsthand — an entire run of parts scrapped, hours lost, money down the drain — all because of a dial indicator that hadn’t been checked in months. It’s frustrating, disheartening, and totally preventable.

That’s why this guide exists.

We’re diving deep into calibrating a dial indicator for machine tools — but don’t worry, we’re keeping it light, friendly, and practical. I’m not here to bury you in metrology jargon or bore you with textbook definitions. I’m here to walk you through the real stuff — what calibration really means, why it matters, how it affects your machining accuracy, and most importantly, how you can calibrate your dial indicator quickly and confidently, right from your workshop or inspection station.

Here’s what we’ll cover together:

- What exactly is a dial indicator and how does it work?

- Why calibration is a non-negotiable part of your machining process

- What tools and setups you’ll need to get it done right

- A step-by-step walkthrough of the calibration process

- Pro tips to extend your dial indicator’s accuracy and lifespan

By the end of this article, you’ll not only know how to calibrate a dial indicator — you’ll understand the why behind it, and you’ll feel more confident in every measurement you take. Because let’s face it: machining isn’t just about cutting metal. It’s about trust — in your tools, your process, and yourself.

So grab a cup of coffee (or a micrometer), and let’s get your dial indicator back on point.

Fundamentals of Dial Indicators & Their Use in Machine Tools

What is a Dial Indicator / Dial Test Indicator / Plunger Type

Okay, basics first. A dial indicator (sometimes just “dial gauge”) is a mechanical instrument that converts small linear displacement of its plunger (spindle/rack system) into a rotation of a pointer on a dial face.

There is also the dial test indicator (DTI), where a lever-mounted stylus pivots sideways instead of plunging in and out, which makes it useful in tight spots.

Key types:

- Plunger dial indicator: The plunger moves in and out along its own axis.

- Dial test indicator: Stylus swings or deflects; used for checking form, alignment, etc.

- Digital variants: Same principle, but with an electronic readout.

In machine tool setups, these indicators help in:

- Checking spindle runout

- Aligning workpieces

- Checking parallelism

- Setup referencing

- Checking small deviations or variations

They’re your “go-to eye” for precision in the shop.

Key Specifications: Range, Resolution, Accuracy, Repeatability, Hysteresis

When choosing and calibrating indicators, these specs are your friends:

- Measuring range (travel): e.g. 0–5 mm, 0–10 mm, etc.

- Resolution (graduation): The smallest increment it can show, e.g. 0.01 mm or 0.001 inch.

- Accuracy / permissible error: How far off the reading can be vs actual.

- Repeatability: If you cycle it back and forth, how consistent are the readings?

- Hysteresis / retrace error: Difference between forward motion and backward motion at the same point.

- Linearity / adjacent error: How closely the reading tracks across the range (is it linear or wavy?).

These terms often appear in indicator specifications or calibration standards.

Roles in Machine Setup, Alignment, Runout Checks

You might use a dial indicator:

- Mounted in the spindle to sweep a bore or fixture and check that it is concentric with the spindle axis

- Checking taper alignment in the machine

- Verifying parallelism between surfaces

- Setup reference (zeroing, edge finding)

- Measuring runout of bores, shafts

Because of that, any error in the indicator itself propagates into your whole machining accuracy. That’s why calibration is not optional.

Why Calibration is Non‑Negotiable

Let me be real: many shops skip calibration because “it’s too time-consuming” or “we use them only for setup, not final measurement.” But that’s risky.

Errors can creep in via wear, shock, dirt, and heat, and those errors stack up. A dial indicator that’s off by a few microns might not kill one part, but across many parts over time, you’ll see drift, out-of-spec parts, scrap, and lost reputation.

Also, if you work under ISO 9001 or an aerospace quality system such as AS9100, you need documented, traceable calibration.

So calibrating your indicators is like tuning your instrument — you want a reliable baseline.

Standards, Traceability & Quality Requirements

Alright, here comes the backbone of calibration: the rules and the traceability chain.

Common Calibration / Metrology Standards

Some of the go-to standards and frameworks include:

- ISO/IEC 17025: General requirements for the competence of testing and calibration laboratories.

- ANSI/NCSL Z540‑1: U.S. standard covering calibration laboratories and measuring and test equipment (since succeeded by ANSI/NCSL Z540.3).

- JIS / Japanese Industrial Standards: e.g. JIS B 7503 (dial gauges) and related.

- National measurement institutes and their traceability chain (e.g. NIST in the U.S.).

A good calibration lab usually indicates that its processes are traceable to national/international standards. For instance, ATS provides NIST-traceable calibration and issues As Found / As Left reports.

Accuracy Classes, Error Limits, Acceptable Tolerances

Standards often define permissible error limits, e.g. “± 1 graduation,” or a more formal table. For example, the JIS B 7503 tables specify allowed wide-range error, adjacent error, repeatability, and retrace error.

When you calibrate, you compare “actual deviation vs ideal” at multiple points and see if the deviations lie within those allowable tolerances.

Traceability Chain: Gauge Blocks, Master Standards, Calibration Labs

Here’s how traceability works in practice (in my experience):

- The national institute keeps a master reference (e.g. laser interferometer).

- That calibrates a lab’s high-precision standard instrument.

- The lab in turn uses that to calibrate your gauge blocks / masters.

- You use those masters to calibrate your dial indicators.

Every step must have documented uncertainties so you can “trace back” your measurement correctness.

Environmental & Handling Requirements

You don’t calibrate in a dirty, hot, unstable room.

Precision demands:

- Controlled temperature (often ~20 °C ± 1–2 °C)

- Reasonable humidity

- Clean, dust-free environment

- Stabilization time: bring the UUT (unit under test) and standards to ambient temperature for an hour or more.

- Proper handling: gloves, gentle motion, no shocks

If you neglect the environment, your calibration is garbage.

Equipment & Tools Needed for Calibration

You can’t calibrate properly without the right toys. Here’s what you need and why.

Master Artifacts: Gauge Blocks, Step Gauges, Precision Micrometers

Gauge block sets (grade 0, 1, or 2) are your “truth bars.” Use them to set known distances. For fine calibration, the master’s uncertainty has to be significantly better than the tolerance of the indicator you’re calibrating; a common rule of thumb is a ratio of at least 4:1.
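To put a number on “significantly better,” here is a minimal Python sketch of a ratio check. The 4:1 threshold is an assumed rule of thumb rather than something every standard mandates, and the function name and example values are made up for illustration.

```python
def accuracy_ratio_ok(indicator_tolerance_mm, master_uncertainty_mm, min_ratio=4.0):
    """Return (ratio, ok) for a master versus the indicator it will check.

    min_ratio=4.0 is an assumed rule of thumb; use whatever your
    quality system actually requires.
    """
    if master_uncertainty_mm <= 0:
        raise ValueError("master uncertainty must be positive")
    ratio = indicator_tolerance_mm / master_uncertainty_mm
    return ratio, ratio >= min_ratio

# Hypothetical numbers: 0.010 mm indicator tolerance, 0.001 mm master uncertainty
ratio, ok = accuracy_ratio_ok(0.010, 0.001)
print(f"ratio {ratio:.1f}:1, acceptable: {ok}")
```

If the ratio comes out too low, the fix is a better master, not a looser tolerance.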

Step gauges or “gauge sets” that provide discrete increments are also useful.

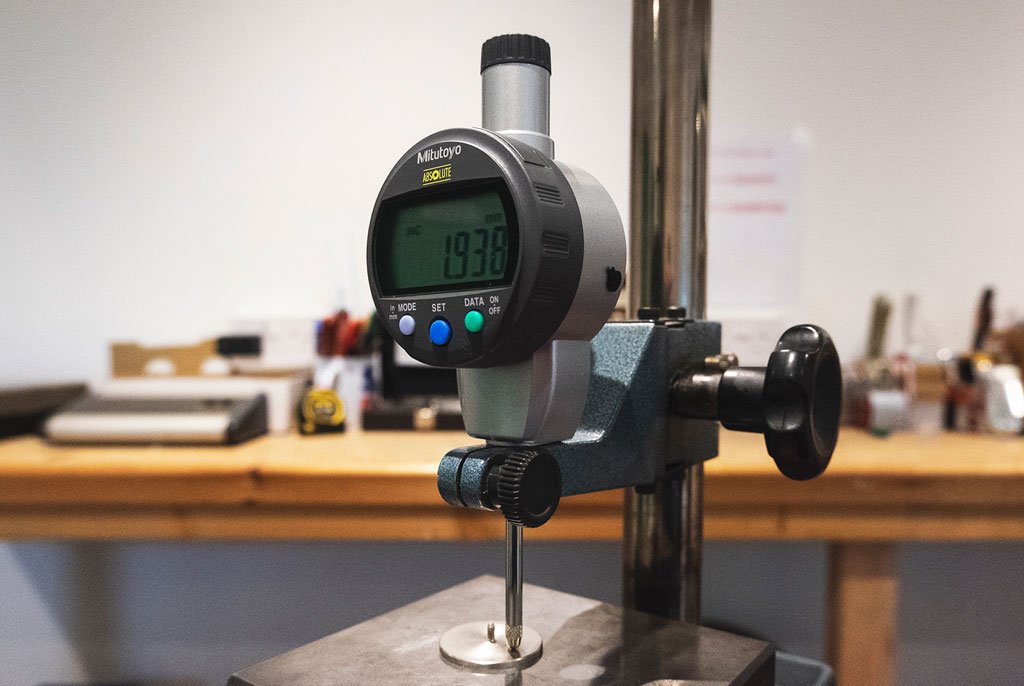

Calibration Testers / Indicator Comparators / Dial Gauge Calibrators

These are specialized instruments (sometimes called “indicator calibrators”) designed to smoothly and precisely displace the indicator’s contact point. Some are motorized, some manual.

In the JIS‑based method, a “calibration tester” with 0.001 mm accuracy is used.

Manufacturers’ data sheets for these calibrators list the details that matter when you choose one: spindle travel, resolution, stated accuracy, and base or mounting style.

Stable Reference Surfaces: Granite Plate / Surface Plate

Your indicator and gauge blocks must rest on a flat, stable, rigid surface—commonly a granite surface plate. This ensures you’re not introducing bending or tilt.

Supporting Tools: Indicator Stands, Clean Cloth, Gloves, Magnifier, Lubricant

You’ll mount the indicator via a rigid stand (mag base, pillar stand).

Also keep:

- Lint-free cloth

- Isopropyl alcohol or cleaning agents

- Gloves

- Magnifying glass (for tip inspection)

- Fine oil or light lubricant (when reassembling)

Documenting Tools: Calibration Log Sheets, Software, Charts

You’ll want a calibration worksheet or spreadsheet to record “As Found” and “As Left” for each point.

Some labs use calibration management software, but even a properly formatted sheet works — the key is consistency and traceability.

Step‑by‑Step Calibration Procedure (Shop / Lab)

Okay, this is the heart of the matter. I’ll walk you through a robust procedure — adapt as needed for your shop or lab.

Pre‑Check & Inspection

Before doing any measurements:

- Visually inspect: crystal, dial face, pointer, housing, stylus. Look for cracks, chips, misalignment.

- Check bezel rotation, pointer movement (no drag or sticking)

- Check stylus / contact tip for wear, bend, flatness. Use magnifier if necessary

- Clean everything: use lint-free cloth, alcohol, blow off debris

- Confirm that plunger moves freely (no binding)

If the indicator is significantly damaged, calibration may not fix it — you might need repair or replacement.

Temperature Stabilization & Environmental Prep

Bring both the UUT (indicator under test) and all reference standards to ambient room temperature for at least 1 hour (longer when tolerances are tight). This reduces thermal expansion error.

Make sure the room temperature is stable, with no direct drafts, doors closed, and low vibration.
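To get a feel for why the soak time matters, here is a rough Python sketch of linear thermal growth, ΔL = α · L · ΔT. The expansion coefficient is an assumed typical value for steel gauge blocks; check your block maker’s data sheet for the real figure.

```python
# Rough thermal growth of a steel gauge block: delta_L = alpha * L * delta_T.
ALPHA_STEEL = 11.5e-6  # per degree C; assumed typical value, not from a data sheet

def thermal_growth_mm(length_mm, delta_t_c, alpha=ALPHA_STEEL):
    """Length change in mm for a block that is delta_t_c away from reference temperature."""
    return alpha * length_mm * delta_t_c

# Hypothetical case: a 25 mm block that is still 2 degrees C warm
growth = thermal_growth_mm(25.0, 2.0)
print(f"growth: {growth * 1000:.2f} micrometres")
```

For this made-up case the growth is a bit over half a micron, which is already a noticeable slice of a 0.001 mm graduation.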

Zeroing / Setting Baseline

Mount the indicator rigidly (stand, magnetic base) on the granite plate. Make sure the plunger axis is perpendicular to the surface (no tilt).

Use your gauge block or reference zero surface. Bring the stylus into contact gently, with a small preload so the pointer is off its rest position, and rotate the bezel (or reset) so that the needle reads zero.

This gives you a starting “zero” point from which deviations will be measured.

Checking at Multiple Points Across the Range (Linearity Test)

Here’s the key: test at multiple defined points (e.g. 10%, 25%, 50%, 75%, 90% of travel).

Procedure:

- Stack gauge blocks (or use calibrator) to get known increments (e.g. 0.5 mm, 1.0 mm, etc.).

- Move the stylus forward to the first point, record indicated vs true.

- Continue to all points.

- Optionally reverse back and measure again (to detect hysteresis).

Compare deviations: are they linear? Are errors within tolerances?

This tests the linearity or wide-range error.

Checking Repeatability (Back‑and‑Forth)

For one or more positions, cycle the stylus back and forth multiple times (say 5 cycles) and note the variation. The maximum spread is the repeatability error.

This reveals whether the mechanism is consistent under motion.
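As a quick illustration, the spread calculation looks like this in Python. The five readings are hypothetical values taken at one nominal position.

```python
def repeatability_mm(readings):
    """Spread (max - min) of repeated readings taken at the same position."""
    if len(readings) < 2:
        raise ValueError("need at least two readings")
    return round(max(readings) - min(readings), 4)

# Five hypothetical cycles at a nominal 5 mm position
readings = [5.010, 5.012, 5.011, 5.010, 5.013]
print(repeatability_mm(readings))
```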

Hysteresis / Retrace Error Test

Approach a point with the plunger moving inward and record the reading, then travel past it and come back to the same point from the other direction. The difference between the two readings is the hysteresis or retrace error.

Some standards or manufacturer specs limit how much hysteresis is acceptable.

In JIS methods, retrace error is explicitly checked.
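Here is a small Python sketch of that comparison, assuming you recorded a forward and a reverse reading at each nominal position. The numbers are invented and the function name is my own.

```python
def retrace_errors(forward_mm, reverse_mm):
    """Absolute forward-vs-reverse difference at each nominal position."""
    if len(forward_mm) != len(reverse_mm):
        raise ValueError("forward and reverse runs must cover the same points")
    return [round(abs(f - r), 4) for f, r in zip(forward_mm, reverse_mm)]

forward = [1.000, 5.010, 9.000]   # hypothetical readings, plunger moving in
reverse = [1.004, 5.013, 9.002]   # same points, plunger moving out
diffs = retrace_errors(forward, reverse)
print(diffs, "worst:", max(diffs))
```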

Adjacent Error / Local Error

“Adjacent error” looks at small increments (e.g. stepping in 0.01 mm intervals, from 0.01 mm to 0.02 mm and so on, across many small intervals) and checks whether the local deviations between neighboring points are acceptable.

It reveals “wavy” behavior that might not show in gross linear testing.
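One simple way to look at local behavior, sketched in Python: take the errors you measured at closely spaced points and difference each one against its neighbor. This is an illustrative simplification, not the exact evaluation procedure from any particular standard, and the error values are hypothetical.

```python
def adjacent_errors(errors_mm):
    """Change in error between each pair of neighbouring check points."""
    return [round(b - a, 4) for a, b in zip(errors_mm, errors_mm[1:])]

# Hypothetical errors (indicated - true) at five closely spaced points
errors = [0.000, 0.002, 0.001, 0.004, 0.003]
steps = adjacent_errors(errors)
worst_step = max(abs(s) for s in steps)
print(steps, worst_step)
```

A large jump between neighbors flags a local problem even when every individual error looks fine on its own.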

Measurement Force Considerations

Dial indicators apply a measuring force via a spring (the force of stylus on surface). Any deviation or variation in that force across travel can introduce error.

Standards sometimes specify a maximum measuring force (figures on the order of ~0.5 N are quoted) and limit how much that force may vary over the travel.

If your indicator has adjustable force, check it; sometimes different internal springs or settings can affect performance.

Adjustment / Fine Calibration (If the Indicator Permits)

Some dial indicators allow internal adjustments (via screws or shims) to correct zero, reduce error or align the dial train.

If your indicator is adjustable:

- Make small corrections based on calibration data

- Re-check the same calibration points after adjustment to confirm improvement

- Be conservative — pushing too far may degrade uniformity

If it’s non-adjustable or the errors are too large, it’s best to send it to a metrology repair house.

Recording the “As Found / As Left” Data & Uncertainties

You should document:

- As Found: the errors before adjustment

- As Left: the errors after adjustment (if done)

- The uncertainty budget: combining error sources (master uncertainty, environmental uncertainty, instrument drift).

- A pass/fail statement per tolerance

- Identification: date, operator, instrument serial, signature

This gives you traceability and audit trail.
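If you keep the log in a spreadsheet, the record can be as simple as one row per check point. Below is a minimal Python sketch using only the standard library; the field names, the serial number, and the pass rule (As Left error within tolerance) are assumptions for illustration, not a required format.

```python
import csv
import io
from dataclasses import dataclass, asdict

@dataclass
class CalPoint:
    serial: str
    date: str
    operator: str
    nominal_mm: float
    as_found_error_mm: float
    as_left_error_mm: float
    tolerance_mm: float

    @property
    def passed(self) -> bool:
        # Assumed rule: judge the point on its As Left error
        return abs(self.as_left_error_mm) <= self.tolerance_mm

def to_csv(points):
    """Render the points as CSV text with a trailing pass/fail column."""
    if not points:
        return ""
    buf = io.StringIO()
    fields = list(asdict(points[0]).keys()) + ["passed"]
    writer = csv.DictWriter(buf, fieldnames=fields)
    writer.writeheader()
    for p in points:
        writer.writerow({**asdict(p), "passed": p.passed})
    return buf.getvalue()

# Hypothetical record for one indicator
points = [
    CalPoint("DI-0001", "2024-01-15", "A. Operator", 5.0, 0.012, 0.004, 0.010),
    CalPoint("DI-0001", "2024-01-15", "A. Operator", 10.0, 0.015, 0.011, 0.010),
]
print(to_csv(points))
```

The uncertainty budget would sit alongside this table as its own entry on the worksheet.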

Pass/Fail Decision & Labeling

If all errors are within allowable tolerances, mark the indicator as “calibrated,” with a sticker showing the calibration date, the next due date, the serial number, the lab, and the operator.

If it fails, mark “do not use” or “repair needed,” and segregate it from production use.

Special Considerations for Machine Tools Context

Since you’re calibrating for use on machines, there are extra wrinkles to watch.

Mounting the Dial Indicator on Machine Tool

On a mill, lathe, or boring machine, you’ll mount the indicator using magnetic bases, holder arms, or custom fixtures. It’s very important your mounting is rigid and free of flex or vibration.

If the mount bends or moves, you introduce additional error. Always check mounting rigidity before trusting readings.

Angular Errors & Cosine Error (Stylus Angle)

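If the stylus is tilted relative to the direction of motion, you get a cosine error: the reading and the true displacement differ by a factor of cos θ, where θ is the tilt angle. In the usual setups (a tilted plunger on a flat surface, or a lever-type stylus at an angle to the surface) the indicator reads slightly more than the true movement, so true ≈ indicated × cos θ.

For a plunger indicator, keep the plunger in line with the measurement direction; for a lever-type test indicator, keep the stylus as close to perpendicular to the measurement direction as possible. Some stylus tips are designed to minimize cosine error within a few degrees.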

Often machinists joke: “Don’t tilt your indicator — or your work will pay for it.”
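How much does a small tilt cost you? The reading and the true displacement differ by a factor of cos θ, so the size of the discrepancy is (1 − cos θ). Here is a short Python sketch; the function name and the sample angles are just for illustration.

```python
import math

def cosine_error_percent(angle_deg):
    """Size of the cosine error as a percentage, for a tilt of angle_deg degrees."""
    return (1.0 - math.cos(math.radians(angle_deg))) * 100.0

for angle in (5, 10, 15, 30):
    print(f"{angle:>2} deg tilt: {cosine_error_percent(angle):.2f} % discrepancy")
```

The discrepancy stays well under 1 % at 5 degrees and grows quickly after that, which is why a rough eyeball alignment is usually enough for small tilts but not for large ones.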

Dynamic Factors: Vibration, Shock, Thermal Drift

In a machine shop, vibration, temperature drift, and spindle heat all affect readings.

During calibration, keep the shop quiet, let the machine warm up if you are checking in situ, and avoid shocks.

Frequency of Calibration: Shop vs Lab

In a lab, you might calibrate every 6 or 12 months (or quarterly) depending on usage and standards. In a busy machine shop, indicators used daily might need more frequent checks (e.g. quarterly, monthly spot-checks).

Some shops keep a “reference indicator” that is checked weekly against a master to detect drift.

Using Calibrated Indicators in Setups

Once calibrated, use the indicator for:

- Spindle alignment & runout checks

- Checking alignment of machine axes

- Workpiece alignment

- Parallelism checks

- Tool setting & offsets

Because your indicator is traceable, your setups are more trustworthy.

Common Sources of Error & Troubleshooting

Even with good procedure, errors creep in. Here’s what to watch for and how to troubleshoot.

Mechanical Wear, Backlash, Play in Gears

Over time, gears wear or play develops. That produces sloppy motion or inconsistent readings.

If repeatability is poor, inspect the internal movement or send for servicing.

Dirt, Lubricant, Stick‑Slip

Dirt or dried lubricant will cause jerky motion or stick-slip. Clean thoroughly and re-lubricate lightly as needed.

Stylus Misalignment or Bent Tip

A bent or worn stylus tip introduces systematic error. Always check tip shape under magnification. Replace if needed.

Temperature and Thermal Expansion

Even minor temperature variation affects metal dimensions and spring behavior. Always calibrate in a controlled environment.

Parasitic Deflections in Mounting

If your base, holder, or arm flexes, you’ll measure false deviations. Use rigid mounting and check for mechanical bend.

Inconsistent Measuring Force

If the internal spring force varies across travel, errors will follow. Some calibration methods (JIS-style tests, for example) include a dedicated measuring-force check.

Drift and Zero Shift Over Time

After calibration, over time your zero may drift (spring fatigue, temperature cycles). That’s why periodic re-verification is vital.

What to Do When Indicator Fails

If your indicator is beyond repair in the shop:

- Mark it “do not use”

- Send to a specialized repair / metrology lab

- Or replace it

- Always keep backups / spare indicators

Case Example: Calibrating a Dial Indicator per JIS B 7503

Let me walk you through a distilled version of a calibration method based on JIS B 7503 (as I read in research) so you see it in action.

Overview of JIS B 7503‑based Method

A study on “Calibration of Dial Indicator Using Calibration Tester with JIS B 7503 Standard” shows how to calibrate an indicator of range 0–25 mm, resolution 0.01 mm or 0.002 mm.

They used:

- A calibration tester (serial, high-accuracy)

- Gauge block set (5 mm, 10 mm, 20 mm)

- Environmental monitoring (thermo-hygrograph)

- Cleaning, stabilization, careful mounting

Example Instrument, Artifact Set, Steps & Results

For instance:

- Pre-inspect and clean everything.

- Use gauge block 5 mm, bring stylus onto block, zero the dial.

- Raise the block stack to 10 mm (a true step of 5 mm from your zero) and record indicated vs. true.

- Continue to 15 mm, 20 mm, etc.

- Reverse motion (retrace) and record hysteresis.

- Evaluate repeatability by cycling multiple times at 10 mm, 20 mm.

- Make minor corrections if instrument permits; then re-check.

They compute the error = indicated – true at each point. These errors must lie within allowed tolerance as per JIS tables.

They also compute measurement uncertainty, combining:

- Calibration tester uncertainty

- Gauge block uncertainty

- Environmental effects

- “Instrument under test” uncertainty contributions

Then decide pass/fail.

Uncertainty Evaluation & Error Budget

For example, if your calibrator has ±0.001 mm uncertainty, gauge blocks ±0.0005 mm, and environmental drift ±0.0003 mm, those combine by root-sum-square (not a straight sum) into a combined uncertainty.
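Worked out in Python, the root-sum-square for those three numbers looks like this. It is a sketch that treats each ± figure as an independent contribution of the same kind; a formal budget would also deal with distributions and coverage factors.

```python
import math

def combined_uncertainty(*components_mm):
    """Root-sum-square of independent uncertainty contributions (all in mm)."""
    return math.sqrt(sum(u * u for u in components_mm))

u_c = combined_uncertainty(0.001, 0.0005, 0.0003)
print(f"combined: {u_c:.5f} mm")   # roughly 0.0012 mm
```

Notice that the result is only a little larger than the biggest single contributor, so improving the calibrator pays off far more than chasing the smallest term.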

If your UUT’s deviations are less than tolerance minus the uncertainty margin, you pass.
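That decision rule is simple enough to sketch in Python. The function name and the sample numbers are hypothetical; the rule itself is the one described above, where the error must be smaller than the tolerance minus the uncertainty.

```python
def guard_banded_pass(max_abs_error_mm, tolerance_mm, uncertainty_mm):
    """Pass only if the worst error is below the tolerance shrunk by the uncertainty."""
    acceptance_limit = tolerance_mm - uncertainty_mm
    return max_abs_error_mm < acceptance_limit

# Hypothetical: 0.010 mm tolerance, 0.00116 mm combined uncertainty
print(guard_banded_pass(0.008, 0.010, 0.00116))  # True, inside the reduced limit
print(guard_banded_pass(0.009, 0.010, 0.00116))  # False, in tolerance but too close to call
```

The second case is the interesting one: the indicator is nominally in tolerance, but the measurement is not good enough to prove it.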

Interpretation, Pass/Fail, Adjustment

If “As Found” errors are within tolerance, you’re OK. If borderline, adjust and re-test.

If outside tolerance, either repair, decommission, or send to a lab.

This method provides a disciplined, standardized approach that’s more robust than ad hoc checks.

Best Practices & Tips from the Shop

Let me share from real shop life — some tips and tricks I’ve learned (so you don’t make my mistakes).

Use a “Gold‑Master” or Reference Indicator

Keep one high‑quality indicator (calibrated in a lab) as your workshop standard. Periodically check your other indicators against it. This catches drift early.

Mark Intervals & Schedule

Decide a calibration schedule: e.g. every 6 months, or sooner if heavy use. Mark it clearly on the tool. Don’t leave an expired indicator in use.
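If you track due dates in a script rather than on a wall chart, a minimal Python sketch might look like this. The 182-day default stands in for “about 6 months” and is an assumption; set it to whatever interval your shop decides on.

```python
from datetime import date, timedelta

def next_due(last_cal, interval_days=182):
    """Next calibration due date; 182 days approximates a 6-month interval."""
    return last_cal + timedelta(days=interval_days)

def is_overdue(due, today):
    """True once today is past the due date."""
    return today > due

last = date(2024, 1, 15)          # hypothetical last calibration date
due = next_due(last)
print(due, is_overdue(due, date(2024, 8, 1)))
```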

Handling, Storage & Transport

- Always store in protective cases

- Avoid shocks, dropping

- Avoid extreme temperature cycles

- Keep them clean and dry

- Label “calibrated / next due.”

Training Operators

Many errors come from misuse.

Train operators to:

- Mount indicators correctly

- Avoid scratching or pushing tip sideways

- Zero gently (no overloading)

- Report anomalies

Maintaining Calibration Records & Audits

Keep a calibration log (manual or digital). Make sure audits can trace any measurement back to the calibration. Treat calibration as part of your quality system.

When to Send to a Professional Metrology Lab

If:

- Errors are outside tolerance after adjustment

- Internal wear or damage suspected

- You need high-accuracy re-certification

- You require accreditation-level traceability

A good calibration lab will provide full documentation and traceability.

“Dialed In and Ready to Roll: Why Calibration is Your Secret Weapon”

So, we’ve gone through a lot together in this guide, haven’t we?

From understanding how a dial indicator works to walking through the full calibration process step by step, you’ve now got the knowledge — and hopefully, the confidence — to take control of your measurements like a true pro.

But let’s not forget the big picture here.

Calibrating a dial indicator for machine tools isn’t just a technical task — it’s a mindset. It’s about valuing accuracy. It’s about taking pride in your craft. And more than anything, it’s about refusing to leave your work to chance.

Because when you take that extra 10–15 minutes to check and fine-tune your dial indicator, you’re not just aligning a needle — you’re aligning yourself with a higher standard of precision. And that matters.

Let me ask you something:

How many errors, misalignments, or scrapped parts could you avoid just by making calibration part of your routine?

The answer? Probably more than you think.

Whether you’re working in a high-end CNC shop, a manual lathe garage, or a quality control lab, a properly calibrated dial indicator is your best friend — the quiet hero behind clean cuts, perfect fits, and trusted tolerances.

And hey, don’t wait until something goes wrong to start caring about calibration. Make it a habit. Mark it on your calendar. Add it to your shop checklist. Trust me — future you (and your parts) will thank you for it.

So next time you zero out your dial, you’ll know it’s not just “close enough.”

It’s spot on.

It’s accurate.

It’s calibrated.

And that’s the kind of confidence every machinist deserves.

Now, go out there and make your work count — one perfect thousandth at a time.

FAQs

How often should I calibrate a dial indicator in a machine shop?

It depends on usage, criticality, and quality standards. A typical interval is 6 or 12 months for general use. If the indicator sees heavy daily use, you might check it quarterly or even monthly. Use a “reference indicator” to spot drift in between full calibrations.

Can I calibrate a dial indicator without specialized equipment?

You can perform basic verification (zero check, consistency with gauge blocks) using a granite plate and gauge blocks, but you won’t get full, documented calibration with uncertainty. For high precision or audit-level work, you need a proper calibrator or a metrology lab.

What’s the difference between “verification” and “full calibration”?

Verification is a quick check to see if your indicator is roughly in tolerance (e.g. check a few points). Full calibration is a formal process: checking multiple points, hysteresis, repeatability, computing uncertainties, adjusting (if possible), and producing a certificate.

If my indicator fails calibration, can I adjust it myself?

Only if the instrument allows adjustment (e.g. internal screws or shims) and you have experience. Make small changes, then re-check. If errors are large or you suspect mechanical damage, it’s better to send it to a metrology repair house.

What is acceptable error / tolerance for a dial indicator in machining?

It depends on the indicator class, resolution, and the usage context. As a rough rule, many dial indicators allow error within ±1 graduation or a few microns over their range. Standards (JIS, ISO) often define acceptance tables. Always compare against the indicator’s specification.